DistillerSR users often cite the “human factor” in systematic reviews as one of the major challenges that the software helps them mitigate. And when you have multiple humans with differing experience and knowledge screening references, the potential for conflict – and errors – can be significant. Starting your study off right with a reviewer calibration exercise will quickly get everyone on the same page, resulting in a measurably better review.

Let’s look at how you can use DistillerSR to make sure your reviewers are screening in a consistent fashion. A few years ago, six members of our team participated in a systematic review being conducted by one of our institutional clients, which started with each screener looking at the same 100 references and making a decision to include or exclude them from the review. Once everyone had gone through the assigned references and recorded their decisions, it was time to see how we did.

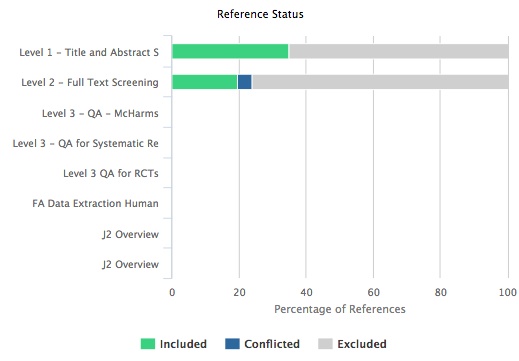

Several of DistillerSR’s built-in reports can help you determine whether reviewers are more or less on the same page. When you log in, the Dashboard immediately shows how many references survived first-level screening. This alone can be enlightening, as one of our reviewers on the project observed:

“I have to admit, I was initially surprised to see that about 35% of references had made it to the next level (the green segment in the chart above). Then I realized that this screening level had been configured so that a reference was excluded only if all six reviewers voted to exclude it (remember, this was still just a training exercise). Given that, a 65% consensus exclude rate seemed pretty good.”
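To make the arithmetic behind that quote concrete, here is a minimal Python sketch of a unanimous-exclude rule. It assumes each reviewer's decision is recorded as the string "include" or "exclude"; the function names and data layout are hypothetical illustrations, not DistillerSR's configuration or API.

```python
from typing import Dict, List


def advances(decisions: List[str]) -> bool:
    """Unanimous-exclude rule: a reference is dropped only when every
    reviewer voted 'exclude'; a single 'include' sends it to the next level."""
    return not all(d == "exclude" for d in decisions)


def advance_rate(screening: Dict[str, List[str]]) -> float:
    """Fraction of references that survive the level under this rule."""
    survivors = sum(advances(votes) for votes in screening.values())
    return survivors / len(screening)


# Hypothetical votes from six reviewers on three references
screening = {
    "ref-001": ["exclude"] * 6,                # unanimous exclude: dropped
    "ref-002": ["exclude"] * 5 + ["include"],  # one include: advances
    "ref-003": ["include"] * 6,                # unanimous include: advances
}
print(f"{advance_rate(screening):.0%}")  # 67%
```

Under this rule, any disagreement keeps a reference in play, which is why a relatively high pass-through rate is expected during a six-reviewer training exercise.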

Inter-rater Reliability Scores

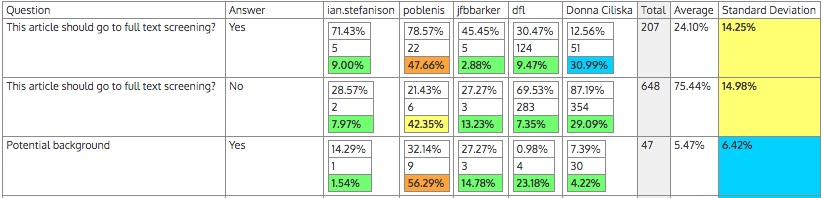

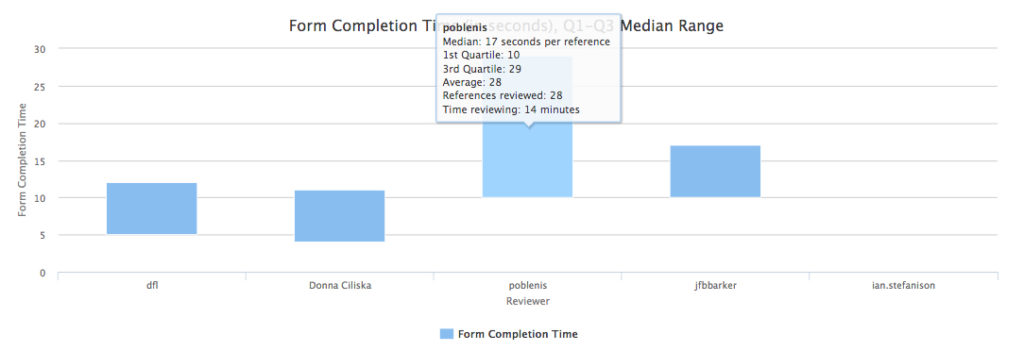

The DistillerSR Kappa report gives you more detail about how consistent your reviewers are. In this review, the DistillerSR Inc. team discovered they weren’t quite on the same wavelength in their initial screening decisions. To dig deeper into the inconsistencies, you can run the Statistical report to see how many references each person included or excluded, and the User Metrics report to see how long each reviewer took to complete each screening form. These timing metrics can alert you to possible quality issues if reviewers are moving too quickly, and help you roughly estimate how long the entire screening process will take.
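This post doesn't say exactly which agreement statistic the Kappa report computes. As a generic illustration only, the sketch below computes Fleiss' kappa, a standard extension of Cohen's kappa to more than two raters, for a set of include/exclude decisions. It assumes every reference was screened by the same number of reviewers, and the example votes are made up.

```python
from collections import Counter
from typing import Sequence


def fleiss_kappa(ratings: Sequence[Sequence[str]]) -> float:
    """Fleiss' kappa for N items, each rated by the same number n of raters.

    ratings[i] holds the n category labels assigned to item i.
    1.0 means perfect agreement; 0.0 means agreement no better than chance.
    """
    n_items = len(ratings)
    n_raters = len(ratings[0])
    if n_raters < 2 or any(len(r) != n_raters for r in ratings):
        raise ValueError("every item needs the same number (>= 2) of ratings")

    categories = sorted({label for item in ratings for label in item})
    counts = [Counter(item) for item in ratings]  # n_ij for each item i

    # Observed agreement per item: share of rater pairs that agree
    pairs = n_raters * (n_raters - 1)
    p_items = [sum(c[cat] * (c[cat] - 1) for cat in categories) / pairs for c in counts]
    p_bar = sum(p_items) / n_items

    # Expected chance agreement from the overall category proportions
    total = n_items * n_raters
    p_e = sum((sum(c[cat] for c in counts) / total) ** 2 for cat in categories)

    if p_e == 1:  # everyone used a single category, so kappa is undefined
        raise ValueError("kappa is undefined when only one category is used")
    return (p_bar - p_e) / (1 - p_e)


# Hypothetical decisions from six reviewers on four references
ratings = [
    ["exclude"] * 6,
    ["include"] * 6,
    ["include"] * 3 + ["exclude"] * 3,
    ["exclude"] * 5 + ["include"],
]
print(f"{fleiss_kappa(ratings):.2f}")  # 0.52
```

Here two references are unanimous and two are split, which pulls kappa well below 1 even though most individual votes agree with the majority.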

“When I looked at the Statistics report, I could see that I was including more studies than any of my fellow reviewers. I got the feeling that I was being too lenient on the study design and patient population. My primary confusion was around whether or not to include papers where the desired patient population was used in part of the study, but not in the whole study. It seemed to me that there was still value to be extracted if a subset of the study used the right patients. I’d need to discuss this in our team call next week.”

“The form completion time report helped us to identify reviewers who may be struggling with the material and therefore taking longer to get through each abstract.”
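The rough workload estimate mentioned above is simple arithmetic once you have per-form completion times. The sketch below is a hypothetical illustration, not a DistillerSR feature: it assumes you have a list of form completion times in seconds, the number of references still to screen, and how many reviewers screen each reference.

```python
from statistics import median
from typing import List


def estimate_hours(form_seconds: List[float], remaining_refs: int,
                   reviewers_per_ref: int) -> float:
    """Rough total reviewer-hours for the rest of a screening level.

    Uses the median completion time, which is less affected than the mean
    by a few unusually slow or fast forms.
    """
    return median(form_seconds) * remaining_refs * reviewers_per_ref / 3600


# Hypothetical calibration timings (seconds per form)
times = [40, 45, 50, 60, 300]
print(f"{estimate_hours(times, remaining_refs=2000, reviewers_per_ref=2):.1f}")  # 55.6
```

The same timing data can be compared reviewer by reviewer: someone far below the team median may be skimming, while someone far above it may be struggling with the criteria.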

Reviewer Calibration at Full Document Screening

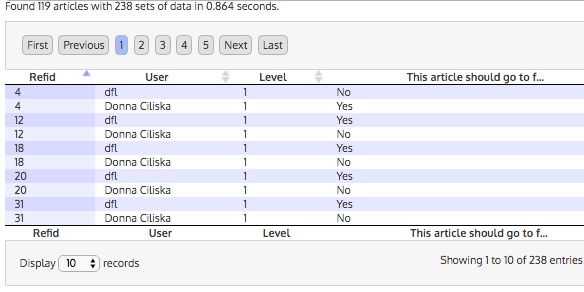

Reviewer calibration is most effective when you analyze how reviewers handle Full Text Screening as well as Title and Abstract Screening. When the DistillerSR Inc. team reached this point in the project, more reviewer inconsistencies appeared.

DistillerSR’s Conflict report is ideal for identifying where disagreements exist between reviewers for each reference.

“We went through each reference in the disagreement report and asked the reviewer who was most out of sync to explain how they arrived at their answers. This was also a great opportunity to discuss how to best interpret the questions and how to most effectively read the references.

“The entire session took about an hour, with participants Skyping in from five different locations. We now felt confident that we were more or less on the same page as screeners, and we were ready to start screening the rest of the references in the study.”
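The core of that conflict review, finding references without unanimous agreement and seeing which reviewer was most often out of sync, can be sketched in a few lines. This is a hypothetical illustration with a made-up data layout and reviewer names, not DistillerSR's Conflict report or its export format.

```python
from collections import Counter
from typing import Dict, List, Tuple

# Hypothetical layout: reference ID -> {reviewer name: decision}
Decisions = Dict[str, Dict[str, str]]


def find_conflicts(decisions: Decisions) -> Dict[str, List[str]]:
    """For each reference without unanimous agreement, list the reviewers
    whose decision differs from the most common one.

    On a tie, Counter.most_common keeps the decision seen first, so the
    'minority' label is arbitrary in that case.
    """
    conflicts = {}
    for ref_id, votes in decisions.items():
        tally = Counter(votes.values())
        if len(tally) > 1:  # at least two different answers
            majority, _ = tally.most_common(1)[0]
            conflicts[ref_id] = sorted(r for r, d in votes.items() if d != majority)
    return conflicts


def most_out_of_sync(conflicts: Dict[str, List[str]]) -> List[Tuple[str, int]]:
    """Rank reviewers by how often they disagreed with the majority."""
    return Counter(r for outliers in conflicts.values() for r in outliers).most_common()


decisions = {
    "ref-001": {"Ana": "include", "Ben": "include", "Cai": "include"},
    "ref-002": {"Ana": "include", "Ben": "exclude", "Cai": "exclude"},
    "ref-003": {"Ana": "include", "Ben": "exclude", "Cai": "exclude"},
}
conflicts = find_conflicts(decisions)
print(conflicts)                    # {'ref-002': ['Ana'], 'ref-003': ['Ana']}
print(most_out_of_sync(conflicts))  # [('Ana', 2)]
```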

Off To a Good Start

Reviewer calibration may add a little extra time at the start of your review, but it can be accomplished quickly and easily with the help of DistillerSR’s configuration and reporting tools. You’ll be able to address inconsistencies, answer reviewer questions, and refine your forms before proceeding with the full review. Getting everyone on the same page at the beginning can go a long way towards an overall faster, smoother, and higher quality review.