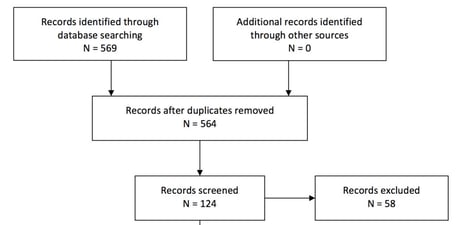

Once you have completed your initial reference search, the first step in a systematic review is to screen your search results by title and abstract to weed out the ones that obviously don’t belong in your review. This is typically done either by a single screener or by dual screeners, with a check that both screeners agree on the inclusion/exclusion decision.

In the “old days”, reviewers might simply sort abstracts into two piles, included and excluded, or record their lists of included/excluded references in a spreadsheet, often without recording the reasons for exclusion. Today, many groups use software tools that manage the screening process and capture reviewers’ inclusion/exclusion decisions in a database.

But even with tools to facilitate the process, many groups still do not capture reasons for exclusion at the title and abstract screening stage of their reviews.

You are excluding that reference for a reason. Why not capture that reason?

Here is something to consider: if you exclude a reference without recording a reason, do you really have a transparent and reproducible process? Presumably, there are many possible reasons for excluding an abstract. If the reason is not recorded, someone revisiting that decision in the future will have to reverse-engineer it (e.g. “Our peer reviewers asked why we didn’t include the Bianchia paper. Do you remember why we excluded that one?”). Similarly, if we are dual screening, how do we know that both screeners had the same reason for excluding a paper? The answer: we don’t.
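To make the dual-screening point concrete, here is a minimal Python sketch of the check that recorded reasons make possible. Everything in it is an assumption for illustration: the function name `compare_exclusions`, the reference IDs, and the reason labels are hypothetical, and real screening tools will store decisions differently.

```python
def compare_exclusions(screener_a, screener_b):
    """Compare two screeners' decisions, keyed by reference ID.

    Each argument maps a reference ID to an exclusion reason, or to None
    if that screener included the reference. (Hypothetical data layout.)
    """
    report = {"agree": [], "decision_conflict": [], "reason_conflict": []}
    # Only compare references that both screeners have looked at.
    for ref_id in sorted(screener_a.keys() & screener_b.keys()):
        a, b = screener_a[ref_id], screener_b[ref_id]
        if a == b:
            # Same decision and, if excluded, the same reason.
            report["agree"].append(ref_id)
        elif a is None or b is None:
            # One screener included, the other excluded.
            report["decision_conflict"].append(ref_id)
        else:
            # Both excluded, but for different reasons.
            report["reason_conflict"].append(ref_id)
    return report


# Hypothetical decisions; the reason labels are made up for this example.
screener_a = {
    "r1": None,
    "r2": "wrong_population",
    "r3": "post_mortem_study",
    "r4": "wrong_population",
}
screener_b = {
    "r1": None,
    "r2": "wrong_population",
    "r3": "wrong_study_design",
    "r4": None,
}

report = compare_exclusions(screener_a, screener_b)
print(report)
```

The `reason_conflict` bucket is the one that stays invisible when only include/exclude decisions are recorded: both screeners excluded the reference, so they appear to agree, but for different reasons.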

To my mind, the rationale for capturing exclusion reasons during screening is persuasive enough from a process transparency perspective. That said, there is another compelling argument for doing so.

Capture expertly coded data as a byproduct of the screening process

We hear a lot today about the overabundance of literature that is being constantly generated and how it is becoming increasingly difficult for researchers to keep up with the flood of new evidence. One of the reasons that this problem exists is that the literature being published is still largely in unstructured text that is difficult to search with any degree of precision. The result is massive amounts of material returned from searches that then has to be screened by humans. It’s a huge problem.

So, when I’m screening 6,000 abstracts for relevance and I exclude 138 of them because they were post-mortem studies, why am I not recording this information? If I did, nobody would ever have to screen those references again for any review requiring living subjects. If I don’t, I have just had a smart human review each abstract and extract some important information about it, only to toss that data away.

Smart human researchers are screening hundreds of thousands of references every day. If they were to record their reasons for exclusion and bind these to the references, they would be creating coded references that could be searched with dramatically increased precision, potentially reducing the screening load for future reviews.
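As a rough illustration of how coded references could reduce the screening load, the Python sketch below pre-filters a search result using exclusion reasons recorded by earlier reviews. The `Reference` class, the `split_by_prior_codes` function, the sample titles, and the reason codes are all hypothetical; this is a sketch of the idea, not a description of any particular tool.

```python
from dataclasses import dataclass, field
from typing import Set


@dataclass
class Reference:
    ref_id: str
    title: str
    # Exclusion reasons recorded by earlier reviews (hypothetical labels).
    exclusion_codes: Set[str] = field(default_factory=set)


def split_by_prior_codes(references, disqualifying_codes):
    """Separate references that still need screening from those that a
    previously recorded exclusion reason already rules out for this review."""
    to_screen, pre_excluded = [], []
    for ref in references:
        if ref.exclusion_codes & disqualifying_codes:
            pre_excluded.append(ref)
        else:
            to_screen.append(ref)
    return to_screen, pre_excluded


# Made-up references standing in for a search result.
refs = [
    Reference("r1", "Hypothetical trial in adults"),
    Reference("r2", "Hypothetical autopsy series", {"post_mortem_study"}),
    Reference("r3", "Hypothetical mouse model", {"animal_study"}),
]

# A new review that requires living human subjects.
to_screen, pre_excluded = split_by_prior_codes(
    refs, {"post_mortem_study", "animal_study"}
)
print([r.ref_id for r in to_screen])
print([r.ref_id for r in pre_excluded])
```

Only the references with no disqualifying code go to a human screener. The pre-excluded ones keep their recorded reason, so the decision can still be reported and audited.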

Why wouldn’t you do it?

About half of the review groups that I work with capture the reason for exclusion for each reference while they are doing title and abstract screening. This not only allows them to report accurately on which references were excluded, and why; it also means they are capturing valuable, reusable metadata that can support more accurate searching, sorting and analysis going forward. It costs almost nothing to do; why wouldn’t you do it?